There are several pitfalls when setting up an ECS cluster, and they can be quite hard to debug. I wrote this post to point out some of them and get you started. If you create your cluster and instances through the AWS console, it does some of these steps for you in the background.

IAM Permissions

The EC2 instances need the following IAM role:

Trust policy:

    resource "aws_iam_role" "execution_role" {
      name = "ecs-ec2-role"

      # Trust policy: lets EC2 instances assume this role.
      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Action = "sts:AssumeRole"
            Effect = "Allow"
            Sid    = ""
            Principal = {
              Service = "ec2.amazonaws.com"
            }
          }
        ]
      })
    }

The role needs the AWS managed policy AmazonEC2ContainerServiceforEC2Role attached, because the instance has to be able to talk to the ECS API (either through a VPC endpoint for ECS, or through a NAT or Internet Gateway):

    resource "aws_iam_role_policy_attachment" "ecs_task_permissions" {
      role       = aws_iam_role.execution_role.name
      policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role"
    }

We need to wrap the role in an instance profile so we can attach it to a launch template later on:

    resource "aws_iam_instance_profile" "instance_profile" {
      name = "instanceprofile"
      role = aws_iam_role.execution_role.name
    }

EC2 instances

For the EC2 instances we can use the SSM parameter that points to the most recent ECS-optimized AMI:

    data "aws_ssm_parameter" "ami" {
      name = "/aws/service/ecs/optimized-ami/amazon-linux-2/recommended/image_id"
    }

Let’s create a security group that the EC2 instances can use:

    module "security_group" {
      source = "terraform-aws-modules/security-group/aws"

      name   = "ec2-ecs-security-group"
      vpc_id = "vpc-123"

      # No inbound rules; all outbound traffic is allowed.
      ingress_with_source_security_group_id = []
      egress_rules                          = ["all-all"]
    }

Note that, depending on the network mode, tasks on the instance can have their own ENIs and therefore their own security groups. With the awsvpc network mode, each task gets its own ENI.
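As a sketch of what that note means in practice: with the awsvpc network mode, security groups are set on the service rather than inherited from the instance. The resource names, container image, port, and CIDR range below are hypothetical placeholders, and `vpc-123` / `subnet-123` are the same stand-in IDs used elsewhere in this post.

```hcl
# Hypothetical security group for the tasks themselves (not the EC2 hosts).
resource "aws_security_group" "task_sg" {
  name   = "ecs-task-security-group"
  vpc_id = "vpc-123"

  # Assumption: the container listens on port 80 and is reached from inside the VPC.
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["10.0.0.0/16"] # placeholder VPC CIDR
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# Hypothetical task definition; awsvpc gives each task its own ENI.
resource "aws_ecs_task_definition" "awsvpc_example" {
  family                   = "awsvpc-example"
  network_mode             = "awsvpc"
  requires_compatibilities = ["EC2"]

  container_definitions = jsonencode([
    {
      name         = "web"
      image        = "nginx:latest" # placeholder image
      essential    = true
      memory       = 256
      portMappings = [{ containerPort = 80 }]
    }
  ])
}

resource "aws_ecs_service" "awsvpc_example" {
  name            = "awsvpc-example"
  cluster         = aws_ecs_cluster.ecs.id
  task_definition = aws_ecs_task_definition.awsvpc_example.arn
  desired_count   = 1

  # The task ENIs get these security groups, independent of the instance's group.
  network_configuration {
    subnets         = ["subnet-123"]
    security_groups = [aws_security_group.task_sg.id]
  }
}
```

With the bridge or host network modes there is no `network_configuration` block, and traffic to the containers is governed by the instance's security group instead.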

Now we can create a launch template for the autoscaling group. Note that the EC2 instance has to register itself with the cluster through the user data; otherwise it will not show up as a container instance in your cluster:

    resource "aws_launch_template" "launchtemplate" {
      name = "template"

      block_device_mappings {
        # Root device of the Amazon Linux 2 ECS-optimized AMI;
        # adjust if your AMI uses a different root device name.
        device_name = "/dev/xvda"

        ebs {
          volume_size           = 60
          encrypted             = true
          delete_on_termination = true
        }
      }

      update_default_version = true

      iam_instance_profile {
        arn = aws_iam_instance_profile.instance_profile.arn
      }

      image_id      = data.aws_ssm_parameter.ami.value
      instance_type = "m6a.4xlarge"

      monitoring {
        enabled = true
      }

      vpc_security_group_ids = [
        module.security_group.security_group_id
      ]

      # Registers the instance with the cluster; the name must match the ECS cluster name.
      user_data = base64encode(
        <<-EOF
        #!/bin/bash
        echo "ECS_CLUSTER=clustername" >> /etc/ecs/ecs.config
        EOF
      )
    }

Now we can create an autoscaling group for the EC2 instances:

    resource "aws_autoscaling_group" "asg" {
      name             = "asg"
      desired_capacity = 0
      max_size         = 1
      min_size         = 0

      # Required because the capacity provider uses managed termination protection.
      protect_from_scale_in = true

      # List of subnet IDs to launch the instances in (private subnets).
      vpc_zone_identifier = ["subnet-123"]

      launch_template {
        id      = aws_launch_template.launchtemplate.id
        version = "$Latest"
      }
    }

Remember, the EC2 instances that this autoscaling group launches need access to the ECS API to register and be found!
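If the private subnets have no NAT gateway, one way to provide that access is through interface VPC endpoints. The following is a minimal sketch, not part of the original setup: the `region` variable, its default, and the `endpoint_sg` security group are assumptions made for illustration, while `vpc-123` and `subnet-123` are the same placeholder IDs used in this post.

```hcl
# Assumption: the region is passed in as a variable; this name is hypothetical.
variable "region" {
  type    = string
  default = "eu-west-1"
}

# Hypothetical security group that lets the ECS instances reach the endpoints over HTTPS.
resource "aws_security_group" "endpoint_sg" {
  name   = "ecs-endpoint-security-group"
  vpc_id = "vpc-123"

  ingress {
    from_port       = 443
    to_port         = 443
    protocol        = "tcp"
    security_groups = [module.security_group.security_group_id]
  }
}

# Interface endpoints the ECS agent talks to when there is no NAT or Internet Gateway.
resource "aws_vpc_endpoint" "ecs" {
  for_each = toset(["ecs", "ecs-agent", "ecs-telemetry"])

  vpc_id              = "vpc-123"
  service_name        = "com.amazonaws.${var.region}.${each.key}"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = ["subnet-123"]
  security_group_ids  = [aws_security_group.endpoint_sg.id]
  private_dns_enabled = true
}
```

Depending on the workload, further endpoints are likely needed as well, for example for ECR and S3 when pulling images from ECR, or for CloudWatch Logs when using the awslogs driver.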

ECS details

We can now create a capacity provider backed by the autoscaling group, which is what links the group to the ECS cluster:

    resource "aws_ecs_capacity_provider" "cp" {
      name = "EC2"

      auto_scaling_group_provider {
        auto_scaling_group_arn         = aws_autoscaling_group.asg.arn
        managed_termination_protection = "ENABLED"

        managed_scaling {
          status          = "ENABLED"
          target_capacity = 100
        }
      }
    }

Finally we can set up the cluster:

    resource "aws_ecs_cluster" "ecs" {
      depends_on = [
        aws_ecs_capacity_provider.cp
      ]

      # Must match the ECS_CLUSTER value written by the launch template's user data.
      name = "clustername"

      setting {
        name  = "containerInsights"
        value = "enabled"
      }
    }

And attach the capacity provider:

    resource "aws_ecs_cluster_capacity_providers" "providers" {
      cluster_name = aws_ecs_cluster.ecs.name

      capacity_providers = [
        "FARGATE", "FARGATE_SPOT", "EC2"
      ]
    }

You can now launch tasks on the new capacity provider, and it should scale in and out automatically based on container demand.
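To try this out, a service can target the capacity provider through a capacity provider strategy. This is a minimal sketch under assumptions: the task definition, container image, command, and service name are hypothetical placeholders, and only the cluster and capacity provider references come from the resources in this post.

```hcl
# Hypothetical task definition that just sleeps, so there is something to place.
resource "aws_ecs_task_definition" "example" {
  family                   = "example-task"
  requires_compatibilities = ["EC2"]

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = "busybox:latest" # placeholder image
      command   = ["sleep", "3600"]
      essential = true
      cpu       = 256
      memory    = 512
    }
  ])
}

resource "aws_ecs_service" "example" {
  name            = "example-service"
  cluster         = aws_ecs_cluster.ecs.id
  task_definition = aws_ecs_task_definition.example.arn
  desired_count   = 1

  # Place the tasks on the EC2 capacity provider instead of Fargate.
  capacity_provider_strategy {
    capacity_provider = aws_ecs_capacity_provider.cp.name
    weight            = 1
  }

  # The provider has to be attached to the cluster before the service can use it.
  depends_on = [aws_ecs_cluster_capacity_providers.providers]
}
```

Because the autoscaling group starts at a desired capacity of 0, the first task will stay in a provisioning state until an instance has launched and registered.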

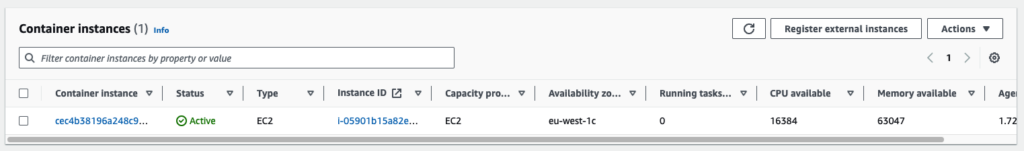

When you launch a task, the autoscaling group scales out automatically and the new container instance registers with the cluster.

AWS will place the task on the EC2 instance and run it there. Note that it takes some time (about 15 minutes) for AWS to scale the EC2 instance in once there are no tasks left on it. This works through CloudWatch alarms that track the CapacityProviderReservation metric. For a more detailed description of ECS cluster scaling, check out this detailed article from AWS.
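The scaling behaviour can be tuned on the capacity provider itself. The sketch below is a variation of the capacity provider from this post with a few extra `managed_scaling` arguments; the values are illustrative assumptions, not recommendations. It is shown under a different, hypothetical resource name only to avoid a clash. An autoscaling group can back only one capacity provider, so this would replace the earlier definition rather than sit next to it.

```hcl
# Hypothetical variant of the capacity provider with explicit scaling settings.
resource "aws_ecs_capacity_provider" "cp_tuned" {
  name = "EC2-tuned"

  auto_scaling_group_provider {
    auto_scaling_group_arn         = aws_autoscaling_group.asg.arn
    managed_termination_protection = "ENABLED"

    managed_scaling {
      status = "ENABLED"

      # Target for the CapacityProviderReservation metric, which is roughly
      # (instances needed / instances running) * 100. A value of 100 keeps
      # no spare instances; a lower value keeps some headroom.
      target_capacity = 100

      # Illustrative values: how many instances to add per scale-out step.
      minimum_scaling_step_size = 1
      maximum_scaling_step_size = 2

      # Seconds before a new instance counts towards the metric.
      instance_warmup_period = 300
    }
  }
}
```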

You can find the full source code on GitHub.

The Terraform registry has some modules that deal with this complexity, but they are quite broad. One of these modules you can find here. The setup in this post is great if you already have a Fargate cluster set up and want to add EC2 instances to the pool.