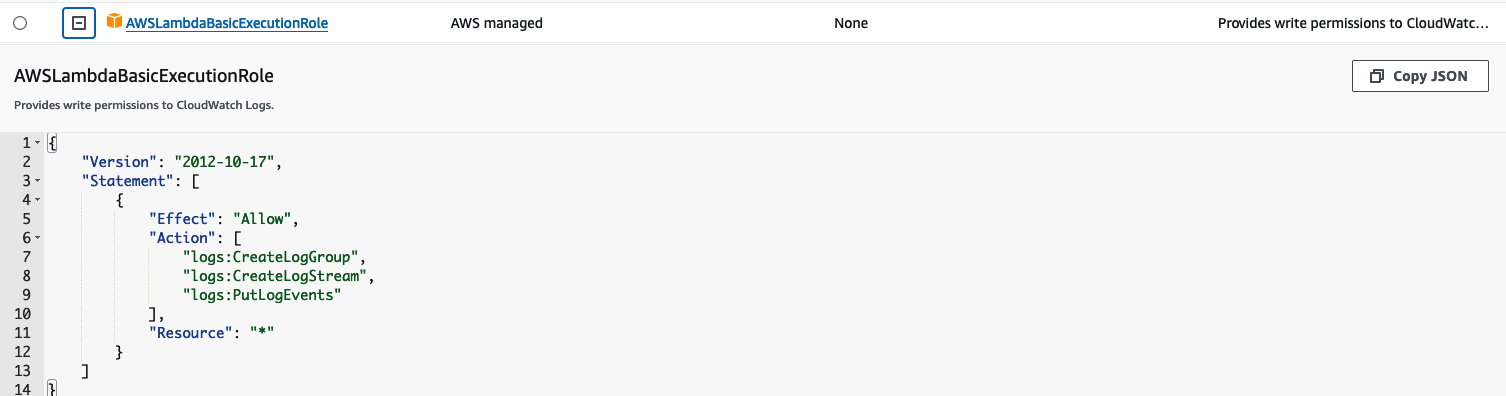

AWS promotes a lot of poor practices on its own website. For instance, look at the default Lambda execution role.

It gives the following permissions:

- logs:CreateLogGroup – Creates the function's log group, but the wildcard lets it create any log group

- logs:CreateLogStream – Can create streams in all log groups

- logs:PutLogEvents – Can put log events into every log stream in your account

The last two are needed to write log entries (and to create their parent streams), but they should not be wildcarded. With the wildcard, a compromised Lambda function can write to every log group in the account (and flood them to make intrusion detection harder).

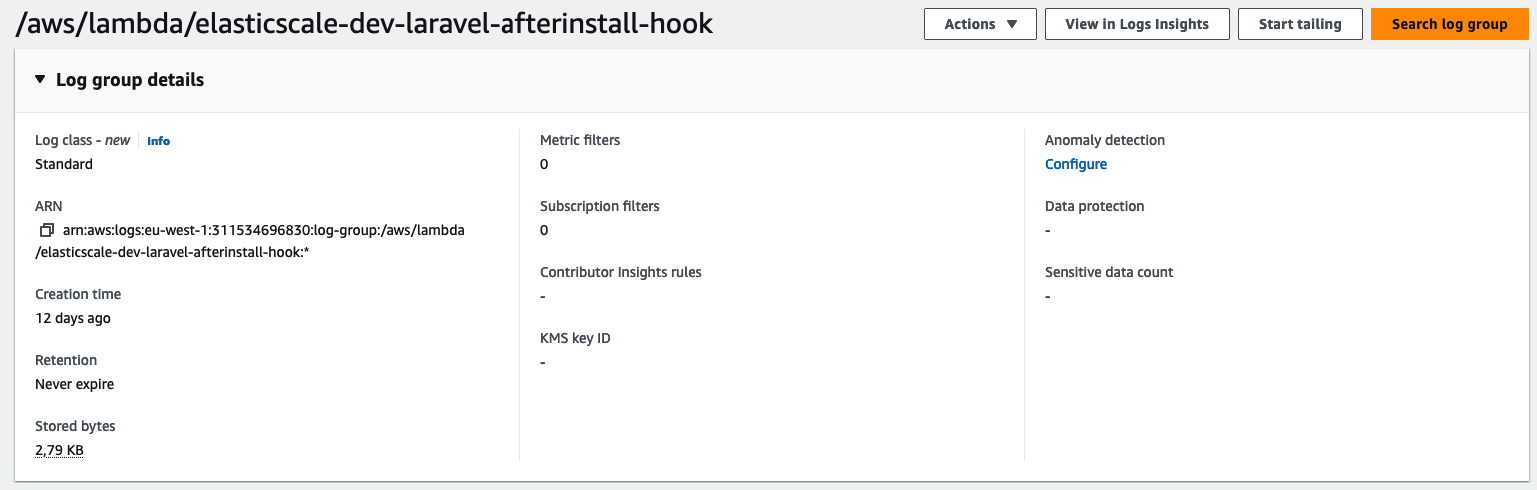

Next to that there is a more annoying problem:

Log groups that the function creates itself have no retention period and no KMS key. That means the logs are not encrypted with a key you control (CloudWatch Logs only applies its default service-managed encryption at rest) and they build up indefinitely. Also, since the log group now exists outside your infrastructure as code (IaC), for instance Terraform, you will get an error when you later try to create it from code. So you cannot put it under management without importing it first.
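If a function has already created its own log group, an import block is one way to bring it under management. The sketch below assumes Terraform 1.5 or later (where import blocks are available) and a hypothetical function called my-function; the resource name existing_lambda is also made up for illustration.

```hcl
# Hypothetical function name, only used for illustration.
locals {
  existing_log_group_name = "/aws/lambda/my-function"
}

# Tell Terraform that the log group already exists in AWS.
# For aws_cloudwatch_log_group the import ID is the log group name.
import {
  to = aws_cloudwatch_log_group.existing_lambda
  id = local.existing_log_group_name
}

# After the import, Terraform manages the group and can set
# the retention period that the auto-created group is missing.
resource "aws_cloudwatch_log_group" "existing_lambda" {
  name              = local.existing_log_group_name
  retention_in_days = 14
}
```

Once the first apply has imported the group, the import block can be removed again.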

In summary: always create your own log groups and make sure your IAM policies only allow access to that log group and its streams.

In Terraform this could look something like this:

    resource "aws_cloudwatch_log_group" "cloudtrail" {
      name              = "/aws/codebuild/${aws_codebuild_project.codebuild.name}"
      kms_key_id        = var.cloudwatch_kms_key_id
      retention_in_days = 14
    }

    data "aws_iam_policy_document" "codebuild" {
      statement {
        effect = "Allow"
        actions = [
          "logs:CreateLogStream",
          "logs:PutLogEvents",
        ]
        # Only the streams inside this one log group.
        resources = [
          "${aws_cloudwatch_log_group.cloudtrail.arn}:log-stream:*",
        ]
      }
    }

Note that the :log-stream:* suffix is necessary because the log group ARN on its own does not cover the streams inside it. Of course, the KMS key policy must also permit use of the key for this log group; for CloudWatch Logs that means allowing the regional logs service principal in the key policy.
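The same pattern carries over to the Lambda function from the start of this post. The following is a minimal sketch, not a complete module: the variables function_name and cloudwatch_kms_key_arn and the resource names are assumptions made for illustration. It relies on Lambda writing to /aws/lambda/ followed by the function name by default.

```hcl
# Assumed inputs, not part of the original example.
variable "function_name" {
  type = string
}

variable "cloudwatch_kms_key_arn" {
  type = string
}

# Create the log group up front so the function never has to.
resource "aws_cloudwatch_log_group" "lambda" {
  name              = "/aws/lambda/${var.function_name}"
  kms_key_id        = var.cloudwatch_kms_key_arn # this argument takes the key ARN
  retention_in_days = 14
}

# No logs:CreateLogGroup at all, and no wildcard across log groups.
data "aws_iam_policy_document" "lambda_logging" {
  statement {
    effect = "Allow"
    actions = [
      "logs:CreateLogStream",
      "logs:PutLogEvents",
    ]
    resources = [
      "${aws_cloudwatch_log_group.lambda.arn}:log-stream:*",
    ]
  }
}
```

It helps to let the function resource depend on the log group, so the group exists before the first invocation tries to log.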

Confused Deputy Problem

Also, whenever you see the following trust policy (the service does not matter), you should see red flags immediately:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "codebuild.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

This policy grants AWS CodeBuild access to the role, but it does not restrict that access to a specific account or source ARN. Always lock these trust policies down, for instance like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "codebuild.amazonaws.com"
          },
          "Action": "sts:AssumeRole",
          "Condition": {
            "StringEquals": {
              "aws:SourceAccount": [
                "account-ID"
              ]
            }
          }
        }
      ]
    }

Or even better, use the ARN of the CodeBuild project (aws:SourceArn can point to something else, such as the ARN of a Lambda function if the service is lambda.amazonaws.com):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "codebuild.amazonaws.com"
          },
          "Action": "sts:AssumeRole",
          "Condition": {
            "StringEquals": {
              "aws:SourceArn": "arn:aws:codebuild:region-ID:account-ID:project/project-name"
            }
          }
        }
      ]
    }

An added benefit of specifying aws:SourceArn is that the ARN already includes the account ID. So you should always try to lock the role down to the specific set of ARNs that may assume it.

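This protects you against the so-called confused deputy problem, where a service that is trusted to assume your role gets tricked into using it on behalf of someone who should not have that access. This can happen through a resource in another account, or if an AWS service gets compromised internally.

If you want to wildcard the project name, StringEquals will not work; you need StringLike (or ArnLike) instead: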

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "codebuild.amazonaws.com"
          },
          "Action": "sts:AssumeRole",
          "Condition": {
            "StringLike": {
              "aws:SourceArn": "arn:aws:codebuild:region-ID:account-ID:project/whatever-*"
            }
          }
        }
      ]
    }

AWS documentation kind of sucks on this front: it mentions the confused deputy problem on some pages, but not on all of them.

Note that not all services set aws:SourceArn and aws:SourceAccount. Notably, CodePipeline does not set them and cannot assume a role whose trust policy requires them.
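In Terraform, the locked-down trust policy can be written as a policy document with condition blocks. This is a sketch under assumptions: the variable project_name and the resource names are hypothetical. The project ARN is assembled from strings rather than read from the CodeBuild project resource, because the project itself usually needs this role, and referencing it here would create a dependency cycle.

```hcl
data "aws_caller_identity" "current" {}
data "aws_region" "current" {}

# Assumed input, not part of the original example.
variable "project_name" {
  type = string
}

locals {
  codebuild_project_arn = "arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${var.project_name}"
}

data "aws_iam_policy_document" "codebuild_assume" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["codebuild.amazonaws.com"]
    }

    # Multiple condition blocks must all match (logical AND).
    condition {
      test     = "StringEquals"
      variable = "aws:SourceAccount"
      values   = [data.aws_caller_identity.current.account_id]
    }

    # Switch the test to StringLike when the ARN contains a wildcard.
    condition {
      test     = "StringEquals"
      variable = "aws:SourceArn"
      values   = [local.codebuild_project_arn]
    }
  }
}

resource "aws_iam_role" "codebuild" {
  name               = "${var.project_name}-codebuild"
  assume_role_policy = data.aws_iam_policy_document.codebuild_assume.json
}
```

Keeping both conditions is redundant when the ARN is fully specified, but the account condition still narrows things down if the ARN is later replaced by a wildcard pattern.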

Debugging IAM policy issues

The easiest and quickest way to debug a policy issue that comes with a vague error message is to use CloudTrail.

For instance, I got the following error when using an S3 bucket together with CodePipeline: "Insufficient permissions: The provided role does not have permissions to perform this action." This error is not helpful at all. It does not tell you which permissions are missing.

To debug this, create a temporary CloudTrail trail and enable data events on it. Trigger the error from the console again and look for API calls that were denied, recognizable by the errorMessage in the event. In my case the role was missing permission for the ListBuckets call. The full set of permissions that worked was (I also have a scripts bucket next to the CodePipeline bucket):

data "aws_iam_policy_document" "codepipeline_policy" {

statement {

effect = "Allow"

actions = [

"s3:PutObject*",

"s3:List*",

"s3:GetObject*",

"s3:GetBucketVersioning",

"s3:GetBucketPolicy"

]

resources = [

aws_s3_bucket.codepipeline_bucket.arn,

"${aws_s3_bucket.codepipeline_bucket.arn}/*"

]

}

statement {

effect = "Allow"

actions = [

"s3:PutObject*",

"s3:List*",

"s3:GetObject*",

"s3:GetBucketVersioning",

"s3:GetBucketPolicy"

]

resources = [

aws_s3_bucket.scripts_bucket.arn,

"${aws_s3_bucket.scripts_bucket.arn}/*"

]

}

statement {

effect = "Allow"

actions = [

"kms:ReEncrypt*",

"kms:GenerateDataKey*",

"kms:Encrypt",

"kms:DescribeKey",

"kms:Decrypt"

]

resources = [

var.s3_kms_key_id

]

}

statement {

effect = "Allow"

actions = ["codestar-connections:UseConnection"]

resources = [var.codestar_connection_arn]

}

statement {

effect = "Allow"

actions = [

"codebuild:BatchGetBuilds",

"codebuild:StartBuild",

]

resources = [

"arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${var.prefix}-${var.environment}-${local.apply_name}-*",

"arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${var.prefix}-${var.environment}-${local.destroy_name}-*",

"arn:aws:codebuild:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:project/${var.prefix}-${var.environment}-${local.plan_name}-*",

]

}

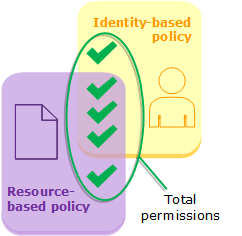

}It might also be your KMS key that blocks permissions, it will give the same error. The key policy must allow access and the IAM role must also grant access. Remember this AWS diagram when working with resource based policies (KMS) and IAM identity-based policies (roles or users):
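On the key policy side, a minimal sketch could look like the following. The variable codepipeline_role_arn and the resource names are assumptions for illustration, and the action list simply mirrors the KMS actions from the role policy above. In a key policy, the resource "*" refers to the key the policy is attached to.

```hcl
data "aws_caller_identity" "current" {}

# Assumed input: the ARN of the role that CodePipeline uses.
variable "codepipeline_role_arn" {
  type = string
}

data "aws_iam_policy_document" "s3_key_policy" {
  # Keep account-level administration so the key cannot be locked out.
  statement {
    sid       = "AccountAdministration"
    effect    = "Allow"
    actions   = ["kms:*"]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }

  # Resource-based side of the grant: the pipeline role may use this key.
  statement {
    sid    = "AllowPipelineRoleUse"
    effect = "Allow"
    actions = [
      "kms:ReEncrypt*",
      "kms:GenerateDataKey*",
      "kms:Encrypt",
      "kms:DescribeKey",
      "kms:Decrypt",
    ]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = [var.codepipeline_role_arn]
    }
  }
}

resource "aws_kms_key" "s3" {
  description = "Key for the pipeline buckets (illustrative)"
  policy      = data.aws_iam_policy_document.s3_key_policy.json
}
```

If the key lives in a different account than the role, both this key policy statement and the KMS statement in the role policy are required for access to work.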

Also, when you create IAM policies, draft them in the AWS console editor first, because it gives you helpful error messages. If you add an action that cannot be scoped to a resource, for instance, you will see an error. And remember that IAM is eventually consistent: a change might appear to work and then stop working on the second attempt, so test it multiple times!
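Coming back to the temporary trail from the CloudTrail debugging steps: it can be declared in Terraform as well, so it is easy to remove afterwards. This is only a sketch. The variables are hypothetical, and it assumes that the bucket behind trail_bucket_name already exists with a bucket policy that lets CloudTrail write to it.

```hcl
# Assumed inputs, not part of the original example.
variable "trail_bucket_name" {
  type = string # existing bucket that CloudTrail is allowed to write to
}

variable "debug_bucket_arn" {
  type = string # ARN of the bucket whose object-level calls you want to see
}

resource "aws_cloudtrail" "iam_debug" {
  name           = "tmp-iam-debug"
  s3_bucket_name = var.trail_bucket_name

  event_selector {
    read_write_type           = "All"
    include_management_events = true

    # Data events for the objects in the bucket under investigation.
    data_resource {
      type   = "AWS::S3::Object"
      values = ["${var.debug_bucket_arn}/"]
    }
  }
}
```

Data events are billed separately, so destroy the trail once the missing permission has been found.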