Sometimes you need a shell (e.g. bash) inside an ECS container, mostly to debug an issue in that container. Until AWS launched ECS Exec in 2021, this was practically impossible.

How does ECS Exec get you access to a shell?

ECS Exec works through the AWS Systems Manager API endpoints, so you do not have to open any inbound ports for it to work. It can connect to tasks running on Fargate as well as tasks running on EC2 container instances. Your task must be able to reach the Systems Manager API endpoints, either through a NAT gateway or through a VPC endpoint. In addition, the outbound security group rules must allow HTTPS (TCP port 443).
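For tasks in private subnets without a NAT gateway, an interface VPC endpoint for the `ssmmessages` service is one way to provide that connectivity. The following is a minimal sketch, not a definitive setup: the function name is made up for illustration, and the VPC, subnet and security group IDs in the example call are placeholders.

```shell
# Sketch: create an interface VPC endpoint for ssmmessages, the
# Systems Manager endpoint that ECS Exec sessions use.
# All IDs passed in are placeholders (assumptions), not values from this setup.
create_ssmmessages_endpoint() {
  # $1 = region, $2 = VPC ID, $3 = subnet ID, $4 = security group ID
  aws ec2 create-vpc-endpoint \
    --region "$1" \
    --vpc-id "$2" \
    --vpc-endpoint-type Interface \
    --service-name "com.amazonaws.$1.ssmmessages" \
    --subnet-ids "$3" \
    --security-group-ids "$4" \
    --private-dns-enabled
}

# Example call (placeholder IDs):
# create_ssmmessages_endpoint eu-west-1 vpc-0123456789abcdef0 subnet-0123456789abcdef0 sg-0123456789abcdef0
```

The security group attached to the endpoint has to accept inbound HTTPS from the task for this to work.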

You can read the official documentation here.

Unprepared tasks (not ready for a shell)

I needed a shell inside the n8n container to debug an issue and pull some logs from the EFS file system. You can read the blog about the n8n setup here.

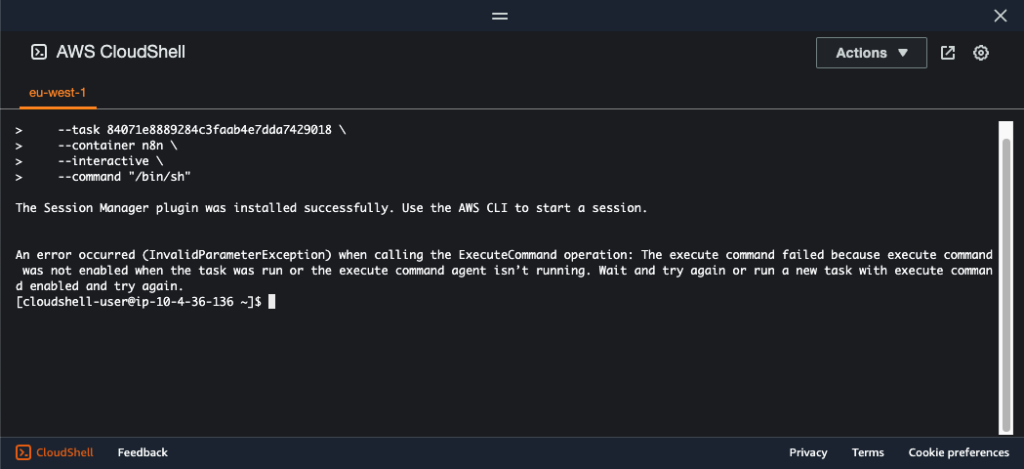

Connecting to an unprepared container from AWS CloudShell will yield an error.

AWS CloudShell already has the Session Manager plugin for the AWS CLI installed. If you want to run the command from your local machine, you must install both the AWS CLI and the Session Manager plugin yourself.

We need the following input parameters for the command. With those, we can get a shell in our ECS task:

- The cluster name

- The task ID, which can be found with the list-tasks command or by navigating to the AWS Console and copying it from the ARN of the task

- The name of the container we want to connect to (check your task definition)

- The command that starts the shell (mostly /bin/sh, but it could also be /bin/bash, depending on your container)
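Looking up the task ID from the CLI could look roughly like this. It is a sketch under assumptions: the two function names are invented for illustration, and the cluster and service names are the ones used elsewhere in this post.

```shell
# Sketch: look up the first task ARN of a service and reduce it to the task ID.

# Strip everything up to the last "/" of a task ARN, leaving the task ID.
task_id_from_arn() {
  printf '%s\n' "${1##*/}"
}

# Print the ID of the first task in the given cluster ($1) and service ($2).
first_task_id() {
  arn=$(aws ecs list-tasks \
    --cluster "$1" \
    --service-name "$2" \
    --query 'taskArns[0]' \
    --output text) || return 1
  task_id_from_arn "$arn"
}

# Example call:
# first_task_id n8n-cluster n8n-service
```

If the service has no running tasks, the query returns nothing useful (the CLI prints `None` in text mode), so the result is worth checking before passing it on.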

Running the command on an unprepared task will result in an error:

    aws ecs execute-command --cluster n8n-cluster \
      --task 84071e8889284c3faab4e7dda7429018 \
      --container n8n \
      --interactive \
      --command "/bin/sh"

The error will be:

An error occurred (InvalidParameterException) when calling the ExecuteCommand operation: The execute command failed because execute command was not enabled when the task was run or the execute command agent isn’t running. Wait and try again or run a new task with execute command enabled and try again.

Starting the task with the ECS Exec Capability

By default, new tasks launch without the ECS Exec capability; you must request it when starting the service or task. This is done with the --enable-execute-command flag, which can be passed to create-service, update-service, run-task and start-task.
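To check whether a given task was launched with the capability, describe-tasks reports an `enableExecuteCommand` field along with the state of the managed agents in each container. A minimal sketch, with a function name made up for illustration:

```shell
# Sketch: report whether a task was launched with ECS Exec enabled and
# which managed agents (such as ExecuteCommandAgent) its containers run.
exec_status() {
  # $1 = cluster name, $2 = task ID
  aws ecs describe-tasks \
    --cluster "$1" \
    --tasks "$2" \
    --query 'tasks[0].{enabled: enableExecuteCommand, agents: containers[].managedAgents[].{name: name, status: lastStatus}}' \
    --output json
}

# Example call:
# exec_status n8n-cluster 84071e8889284c3faab4e7dda7429018
```

For a task started without the flag, `enabled` should come back as false and the agent list should be empty.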

So we can update our service to enable the execute command:

    aws ecs update-service \
      --cluster n8n-cluster \
      --task-definition arn:aws:ecs:eu-west-1:875424272104:task-definition/n8n-taskdef:4 \
      --enable-execute-command \
      --service n8n-service

This will update the service to include the execute capability when it starts new tasks. The existing task must first be stopped, as it was launched without the shell capability.
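Stopping the old task can also be done from the CLI instead of the console. This is a sketch only; the function name and the reason text are illustrative.

```shell
# Sketch: stop a task so the service scheduler starts a replacement,
# which then picks up the ECS Exec setting of the service.
replace_task() {
  # $1 = cluster name, $2 = task ID
  aws ecs stop-task \
    --cluster "$1" \
    --task "$2" \
    --reason "Relaunch with ECS Exec enabled"
}

# Example call:
# replace_task n8n-cluster 84071e8889284c3faab4e7dda7429018
```

Another option is to pass `--force-new-deployment` to update-service, which asks ECS to replace the running tasks as part of a new deployment.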

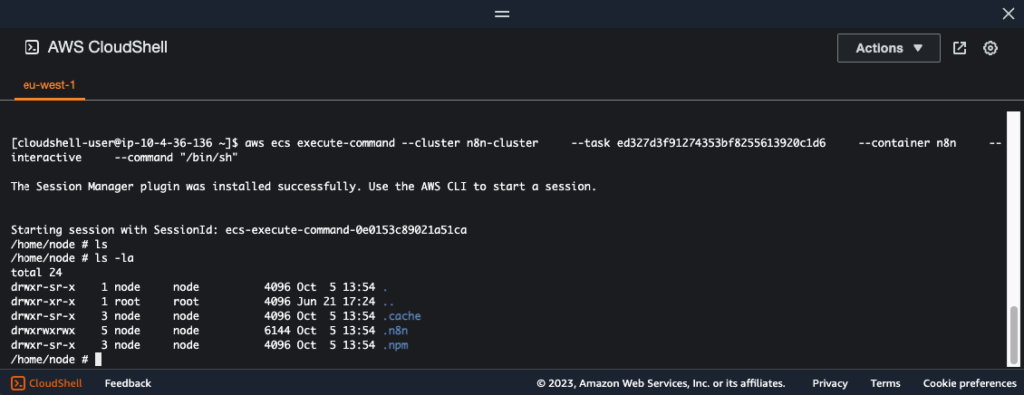

After stopping the task and letting the ECS service spawn a new one, we can try to connect again using the new task ID:

    aws ecs execute-command --cluster n8n-cluster \
      --task ca34fe000aa448018b8216a5c829d936 \
      --container n8n \
      --interactive \
      --command "/bin/sh"

And then we should be good to go, right? Of course we end up yak shaving when we get this error:

An error occurred (TargetNotConnectedException) when calling the ExecuteCommand operation: The execute command failed due to an internal error. Try again later.
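When this error shows up on a task that was started with ECS Exec enabled, it can help to check which IAM role the task actually runs with. A minimal sketch, assuming the task definition revision used earlier in this post; the function name is made up for illustration.

```shell
# Sketch: print the task role ARN configured in a task definition.
task_role_arn() {
  # $1 = task definition family, family:revision, or full ARN
  aws ecs describe-task-definition \
    --task-definition "$1" \
    --query 'taskDefinition.taskRoleArn' \
    --output text
}

# Example call:
# task_role_arn n8n-taskdef:4
```

If the task definition has no task role at all, the CLI prints `None` in text mode.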

Fixing the IAM permissions of the task role

This error is related to the task role of the task. The task role itself needs permission to communicate with the SSM endpoints. This policy should cover it:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "ssmmessages:CreateControlChannel",
            "ssmmessages:CreateDataChannel",
            "ssmmessages:OpenControlChannel",
            "ssmmessages:OpenDataChannel"
          ],
          "Resource": "*"
        }
      ]
    }

Attach the policy to the IAM role. Make sure to attach it to the task role and not to the task execution role. You must restart the task again, because the ECS task registers with the SSM endpoint when it starts up. After starting the new task, we can finally connect to our container:

    aws ecs execute-command --cluster n8n-cluster \
      --task ed327d3f91274353bf8255613920c1d6 \
      --container n8n \
      --interactive \
      --command "/bin/sh"

Here is what a successful connection looks like. We now have a shell within our ECS task!
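The same call can also run a single command instead of opening a shell, which is handy for a quick look at a directory. This is a sketch under assumptions: the function name is invented, and the path in the example call is a placeholder rather than a real location in the n8n container.

```shell
# Sketch: run one command in a container through ECS Exec instead of
# opening an interactive shell. --interactive is still passed, because
# ECS Exec sessions are started in interactive mode.
exec_in_container() {
  # $1 = cluster, $2 = task ID, $3 = container name, $4 = command to run
  aws ecs execute-command \
    --cluster "$1" \
    --task "$2" \
    --container "$3" \
    --interactive \
    --command "$4"
}

# Example call (the path is a placeholder):
# exec_in_container n8n-cluster ed327d3f91274353bf8255613920c1d6 n8n "ls -l /path/to/logs"
```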

Not all containers have a shell built in. You then might run into the following error:

Unable to start command: Failed to start pty: fork/exec /bin/bash: no such file or directory

You can try another shell like /bin/sh or /bin/csh. You do not need any other special software inside the container to get a shell; the shell binary you pass to --command just has to exist in the image.

If your image does not have a shell, you can change the Dockerfile to include one with the COPY command. The cloudflared Docker image and the Vault Docker image, for example, have no shell included. You can use busybox to add the needed shell in your Dockerfile:

    FROM cloudflare/cloudflared:latest
    # add busybox
    FROM busybox
    WORKDIR /
    COPY --from=0 ./ ./

Afterwards you can access the container with the regular method. Also check out our module that can copy Docker Hub public images to ECR. It includes an option to include extra Dockerfile lines.